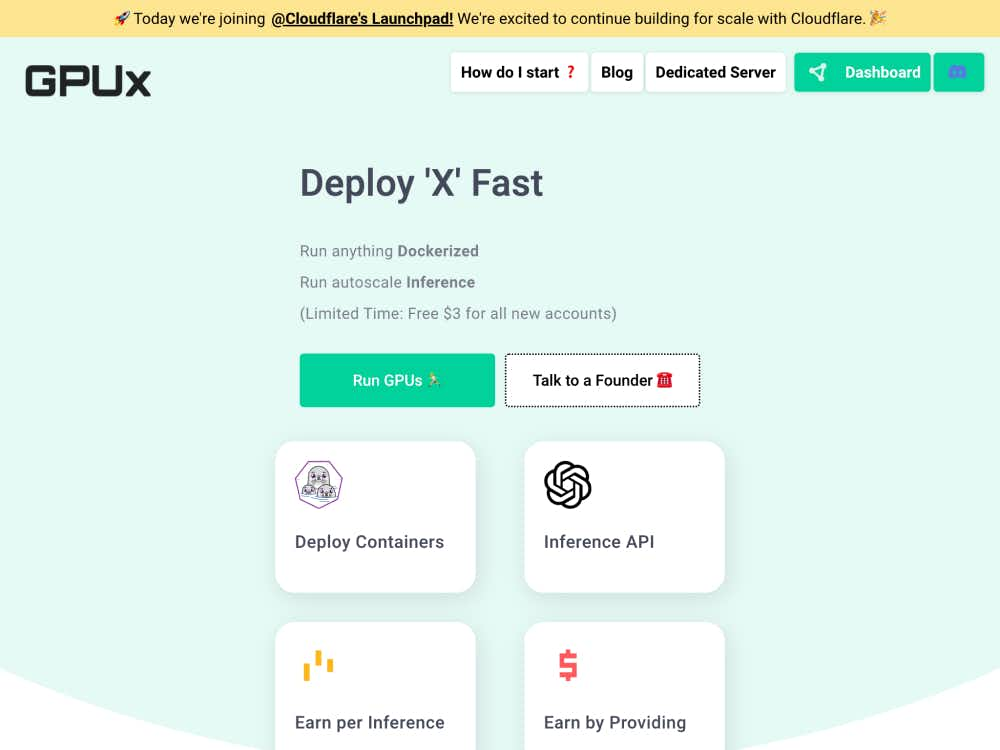

GPUx

GPUx offers ultra-fast serverless inference for AI models such as Stable Diffusion and Whisper. Deploy GPU-accelerated AI instantly, with one-second cold starts, peer-to-peer sharing, and support for private models.

About GPUx

Deploy AI models at lightning speed

GPUx is a serverless GPU inference platform designed to simplify and speed up AI deployment. Whether you run Stable Diffusion, ESRGAN, Alpaca, or Whisper, GPUx lets you launch models in seconds without managing infrastructure.

1-second cold starts for instant AI

Time is critical when deploying AI applications. GPUx minimizes latency with 1-second cold starts, so your model is available almost immediately. In production or in rapid prototyping, that speed makes a real difference for developers and AI-first teams.

How GPUx works

Serverless GPU inference

GPUx uses a serverless model that spins up GPU instances dynamically, only when they are needed. This lowers costs while still delivering high-performance compute for inference tasks. Models such as Stable Diffusion XL or Whisper can be called through simple API requests, with no operational overhead.
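To make "simple API requests" concrete, here is a minimal sketch of what one inference call could look like from Python. This page does not document the GPUx API, so the endpoint URL, the JSON fields (`model`, `inputs`), the model identifier, and the bearer-token header are all assumptions chosen for illustration. The sketch only builds the request and does not send it.

```python
import json
import urllib.request

# Hypothetical endpoint: the real GPUx API URL and schema are not given on this page.
API_URL = "https://api.example.invalid/v1/infer"


def build_inference_request(model: str, inputs: dict, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a single inference call."""
    # Assumed payload shape: a model name plus a dictionary of model inputs.
    body = json.dumps({"model": model, "inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_inference_request(
    model="stable-diffusion-xl",  # assumed model identifier
    inputs={"prompt": "a lighthouse at dusk"},
    api_key="YOUR_API_KEY",
)
# Sending the request would be: urllib.request.urlopen(request, timeout=60)
```

With a serverless platform, the client side stays this small because no instance has to be provisioned or kept warm before the call is made.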

Peer-to-peer model sharing

Organizations can sell access to their private models through GPUx. This creates a marketplace for GPU inference and lets teams monetize their machine learning workloads while retaining control.

Key Features

Run popular AI models

GPUx supports a range of widely used machine learning models:

- Stable Diffusion XL for high-quality image generation

- ESRGAN for image upscaling

- Whisper for speech-to-text transcription

- Alpaca for conversational AI

Volume access with read/write support

For models that need persistent access to data, GPUx supports read/write volumes. This makes it well suited to complex pipelines, particularly those involving file-based I/O or model checkpoints.
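As an illustration of the checkpoint use case, the sketch below writes and reads checkpoints in a directory that stands in for a mounted read/write volume. How GPUx mounts volumes and where they appear in the filesystem is not described on this page, so the directory, the file naming scheme, and both helper functions are hypothetical. A temporary directory plays the role of the volume here.

```python
import json
import os
import tempfile
from pathlib import Path
from typing import Optional


def save_checkpoint(volume_dir: Path, step: int, state: dict) -> Path:
    """Write a checkpoint atomically so readers never see a half-written file."""
    volume_dir.mkdir(parents=True, exist_ok=True)
    # Zero-padded step numbers keep lexical order equal to numeric order.
    target = volume_dir / f"checkpoint-{step:08d}.json"
    tmp = target.with_suffix(".tmp")
    tmp.write_text(json.dumps(state))
    os.replace(tmp, target)  # atomic rename within the same directory
    return target


def load_latest_checkpoint(volume_dir: Path) -> Optional[dict]:
    """Return the state from the highest-numbered checkpoint, or None if there is none."""
    if not volume_dir.is_dir():
        return None
    checkpoints = sorted(volume_dir.glob("checkpoint-*.json"))
    if not checkpoints:
        return None
    return json.loads(checkpoints[-1].read_text())


# A temporary directory stands in for the (assumed) volume mount point.
with tempfile.TemporaryDirectory() as tmp_dir:
    volume = Path(tmp_dir) / "volume"
    save_checkpoint(volume, 1, {"step": 1, "loss": 0.9})
    save_checkpoint(volume, 2, {"step": 2, "loss": 0.7})
    latest = load_latest_checkpoint(volume)
```

Writing to a temporary file and renaming it is a common precaution when a job can be stopped at any moment, which is typical of on-demand instances.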

Developer-friendly environment

GPUx provides CLI tools, GitHub integration, and example use cases on its blog to help developers get started quickly. The platform handles orchestration so you do not have to manage GPUs yourself, leaving you free to focus on model performance and delivery.

Use Cases

Fast inference for generative AI

Run text-to-image models in seconds with Stable Diffusion XL. A good fit for creative tools, visual prototyping, and image generation platforms.

Speech transcription

Deploy Whisper models for real-time or batch audio transcription. A good fit for building speech-to-text applications without the cost or complexity of maintaining GPU servers.

Upscaling and enhancement

Use ESRGAN to improve the quality of videos or images. GPUx runs these enhancements smoothly in a serverless setup, which makes it well suited to production and media workflows.

Live demos and experimentation

Developers can quickly iterate on and deploy demos with instant access to models, which speeds up experimentation without provisioning GPU infrastructure.

Built for modern AI teams

GPUx is trusted by a growing number of teams that want to deploy AI quickly, efficiently, and with full control. Whether you are a startup or a large enterprise, the platform adapts to your needs and scales without added complexity.